#LOCAL DYNAMODB WINDOWS MAC#

Below are screenshots of the Mac and Windows/Linux versions of the SQLite table editor.

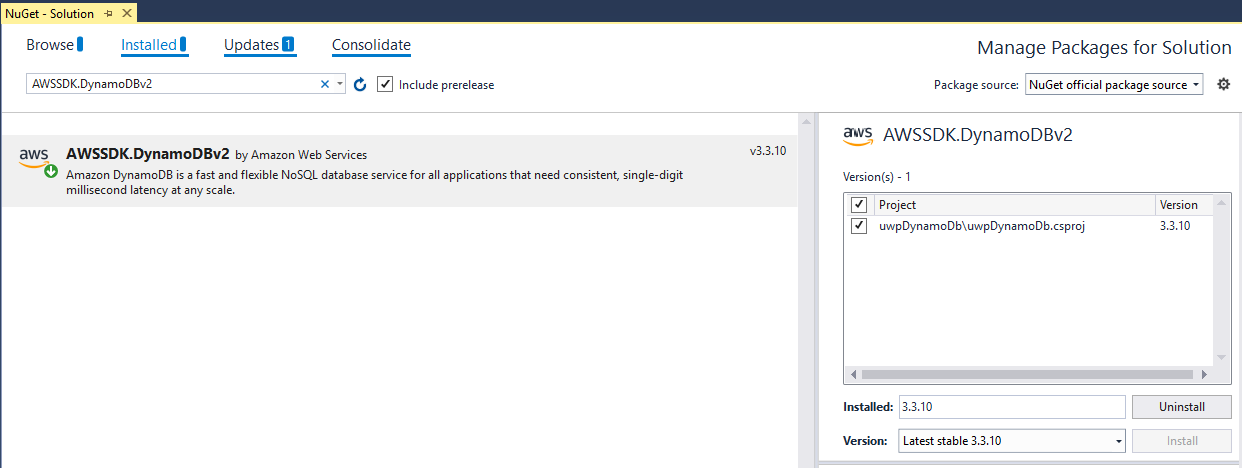

The editor displays information in a tree format for databases, tables, and attributes. It also includes a DynamoDB Export Tool for exporting data from tables in various formats such as delimited files, Excel spreadsheets, HTML, XML, SQL insert statements, and text.

You need to start DynamoDB Local before your integration tests and stop it afterwards. To make things simpler I suggest you use jcabi-dynamodb-maven-plugin, a Maven plugin that I made a few years ago. You will need to add pom.xml to your repository and start/stop Maven from a Rakefile, just like I’m doing here: the `:dynamo` task calls `FileUtils.rm_rf('dynamodb-local/target')`, starts Maven in the background with `pid = Process.spawn('mvn', 'install', chdir: 'dynamodb-local')`, and registers an `at_exit` block that runs `kill -TERM` against that process.

When the task runs, Maven starts DynamoDB Local, binding it to the reserved TCP port. Rake then waits for DynamoDB Local to become available on that port and imports all test classes, starting from test_helper.rb.
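The "wait for availability on the reserved port" step does not depend on Rake. Here is a minimal sketch of the idea in Node.js, chosen to match the Mocha tests discussed later in this post. The helper name `waitForPort`, its retry parameters, and the port used in the usage note are my own assumptions, not part of the original Rakefile.

```javascript
const net = require('net');

// Hypothetical helper: resolves once something accepts TCP connections on
// host:port, and rejects after the initial attempt plus `retries` further
// attempts have all failed, spaced `delayMs` apart.
function waitForPort(port, host, retries, delayMs) {
  return new Promise((resolve, reject) => {
    const attempt = (left) => {
      const socket = net.connect({ port: port, host: host });
      socket.once('connect', () => {
        // Something is listening; we only needed the handshake.
        socket.destroy();
        resolve();
      });
      socket.once('error', () => {
        socket.destroy();
        if (left <= 0) {
          reject(new Error('nothing listening on ' + host + ':' + port));
        } else {
          setTimeout(() => attempt(left - 1), delayMs);
        }
      });
    };
    attempt(retries);
  });
}
```

A call such as `waitForPort(8000, '127.0.0.1', 20, 500).then(runTests)` would hold the tests back until the port answers. Port 8000 is DynamoDB Local's default, and `runTests` is a placeholder; with the Maven plugin the port is whatever was reserved for the build.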

#LOCAL DYNAMODB WINDOWS HOW TO#

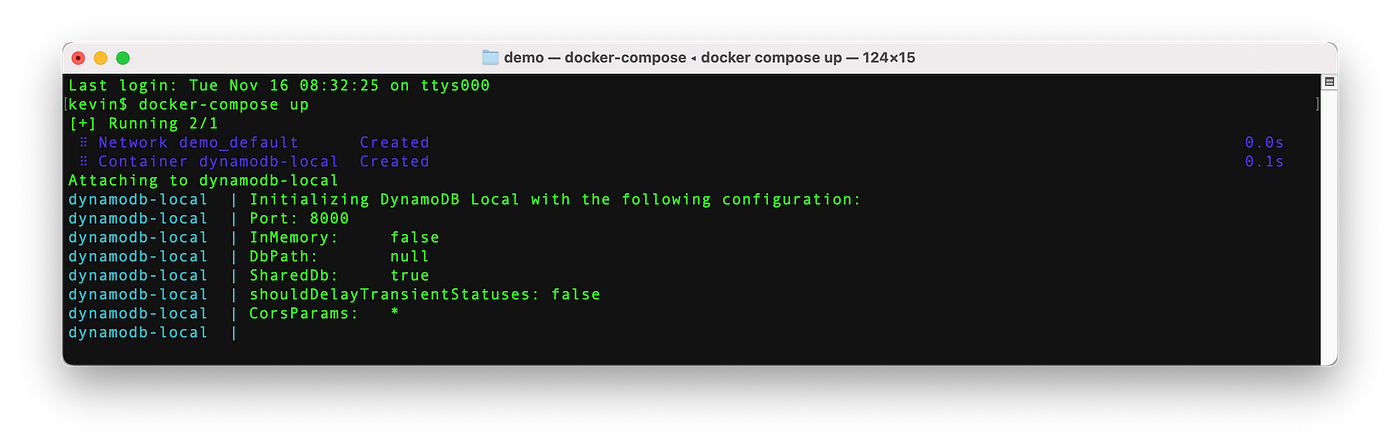

How to bootstrap DynamoDB Local

First, you need to use DynamoDB Local, a command-line tool created by AWS exactly for the purposes of testing.

#LOCAL DYNAMODB WINDOWS CODE#

In SixNines.io, one of my Ruby pet web apps, I’m using DynamoDB, a NoSQL cloud database by AWS. It works like a charm, but the problem is that it’s not so easy to create an integration test to make sure my code works together with the “real” DynamoDB server and tables. The code is open source and you can see it in the yegor256/sixnines GitHub repo.

The documentation for local DynamoDB says that one of the differences between the AWS-hosted and local versions is that CreateTable completes immediately. It seems that although the function does indeed complete immediately, the table isn't immediately available. This meant the unit test using the table often failed with: `1) awsLambdaToTest The querying an empty db for a user Returns: ResourceNotFoundException: Cannot do operations on a non-existent table`

I'm going to jump ahead to the completed unit test file and explain the various things I had to do in order to get it working.

before()/after(): creating and destroying DynamoDB

Rather than attempting to create and destroy the DB for each unit test, I settled on creating it once per unit test file. This is handled in the before() and after() functions. Here the local instance of DynamoDB is spawned using the child_process module and a reference to the process is retained.

The important point to note here is the use of the sleep package and function. I found that when I had multiple test files, each with its own before() and after() functions that did the same as these, the process didn't seem to die immediately, even though kill purported to have killed it (I checked the killed flag). This meant that the before() function in the next set of tests would successfully connect to the dying instance of DynamoDB. Later, when any operation was performed, it would just hang until Mocha timed out the unit test or the before handler. I tried various ways to detect that the process was really dead, but none worked, so I settled for a sleep.
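One detail worth knowing about the `killed` flag: in Node's `child_process` module it only records that a signal was sent to the child, not that the child has terminated. Below is a minimal sketch of an alternative to sleeping, which waits for the child's `'exit'` event. The helper names `startProcess` and `stopProcess` are hypothetical, and I can't say whether this is among the approaches that were tried and found not to work, so treat it as something to experiment with rather than a guaranteed fix.

```javascript
const { spawn } = require('child_process');

// Hypothetical helper: starts a long-running command and returns its handle.
// The command and arguments are parameters, so the sketch can be tried with
// any process, not only DynamoDB Local.
function startProcess(command, args) {
  return spawn(command, args, { stdio: 'ignore' });
}

// Hypothetical helper: sends SIGTERM and resolves only after the 'exit'
// event, i.e. once the operating system reports the process as gone.
function stopProcess(child) {
  return new Promise((resolve) => {
    // Already exited (normally or by signal): nothing to wait for.
    if (child.exitCode !== null || child.signalCode !== null) {
      resolve();
      return;
    }
    child.once('exit', () => resolve());
    child.kill('SIGTERM');
  });
}

// Possible Mocha wiring (before/after are Mocha globals; the jar and library
// paths are assumptions about where DynamoDB Local was unpacked):
//
//   let db;
//   before(function () {
//     db = startProcess('java', ['-Djava.library.path=./DynamoDBLocal_lib',
//       '-jar', 'DynamoDBLocal.jar', '-inMemory', '-port', '8000']);
//   });
//   after(function () { return stopProcess(db); });
```

Mocha waits for a promise returned from a hook, so the next test file's before() would not start until the old instance has actually exited. The sketch sticks to plain promises because async and await are not available on the Node version targeted here.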

The per-test approach uses Node's built-in child_process module to create an instance before each test, store the handle to the process in a local-ish variable and, once the test has finished, just kill it. The important point here is that the '-inMemory' option is used, meaning that when the DB instance is killed and another one started, everything is effectively wiped without having to do anything. The problem I had with this approach is that, in addition to creating the DB each time, I also needed to create a table.

I would use async and await, but the latest version of Node that AWS Lambda supports doesn't support them :-(
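Since a freshly created table may not be usable straight away (the ResourceNotFoundException described above), one option is to poll DescribeTable until the table reports ACTIVE before running any test. The following is a minimal sketch, not the code from the original test file. `waitForTable` is a hypothetical helper, and `describeTable` is injected by the caller so that the sketch does not assume a particular SDK version.

```javascript
// Hypothetical helper. `describeTable` must take { TableName } and return a
// Promise for an object shaped like DescribeTable output, for example
// { Table: { TableStatus: 'ACTIVE' } }.
function waitForTable(describeTable, tableName, retries, delayMs) {
  const retryLater = () => {
    if (retries <= 0) {
      return Promise.reject(new Error('table ' + tableName + ' never became ACTIVE'));
    }
    return new Promise((resolve) => setTimeout(resolve, delayMs))
      .then(() => waitForTable(describeTable, tableName, retries - 1, delayMs));
  };

  return describeTable({ TableName: tableName }).then(
    (data) => {
      if (data.Table && data.Table.TableStatus === 'ACTIVE') {
        return data.Table;
      }
      return retryLater(); // table exists but is still being created
    },
    (err) => {
      // Only "table not there yet" is retried; anything else is a real failure.
      // `code` is where AWS SDK v2 puts the error type, `name` is used by v3.
      const type = err.code || err.name;
      if (type !== 'ResourceNotFoundException') {
        throw err;
      }
      return retryLater();
    }
  );
}
```

With AWS SDK for JavaScript v2 the injected function could be `(params) => dynamodb.describeTable(params).promise()`, where `dynamodb` is an `AWS.DynamoDB` client pointed at the local endpoint. That SDK version also ships a built-in waiter, `dynamodb.waitFor('tableExists', params)`, which does similar polling.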
